The Best LLMs For Autonomous Agents: A Comparison Across Accuracy, Speed, and Cost

Llama 3.1 70B from Fireworks AI takes the cake as the best model to use for running an autonomous agent

Note: this article is recreated from its original publication on locusive.com

Updated September 28, 2024 to include the results of our tests run with Groq under higher rate limits

—

Locusive provides AI-powered copilots for businesses that make it easy for your employees and customers to get the answers they need from your data sources without having to bug your team. While our copilot makes it easy for you to query your different data sources, it uses a lot of data pipelines and technologies under the hood, the most important of which is a large language model, or LLM, which powers the entire system.

LLMs are the brains behind most AI copilots and agents, and selecting the LLMs to use in your copilot is one of the most important choices you can make when you implement your own AI assistant. The wrong one can frustrate your users, cost you money, and worst of all, provide your customers with bad data. The best ones can delight your customers with fast and accurate responses without costing you a ton of money.

Today, there are a large number of LLMs and LLM providers to choose from, each with its own strengths and weaknesses. As a company building AI copilots for other businesses, we’re always looking to optimize the speed and accuracy of our product, so we analyzed ten different LLM systems to find the best system (or combination of systems) for our assistant.

In this article, we’re providing the results of our analysis, complete with methodology and thoughts on how things are likely to change in the future.

Let’s dive in.

Key Findings

At A Glance

Before we delve into the details, here’s a quick overview of our most significant findings:

Our overall winner across speed, accuracy, and cost is Llama 3.1 70B provided by Fireworks AI.

This model had a perfect accuracy score across our tests, produced some of the fastest responses for the user, and is extremely cost-effective when running in an agent. Based on our results, we’ve implemented this model as the main model powering Locusive’s copilot, and recommend it for anyone who’s building an autonomous agent that requires multiple requests to handle complicated user requests.

*Groq offered us higher rate limits to complete this test. We were able to complete one test suite fully but not the second, so the results here are from a single test suite run, and costs have been extrapolated based on those results.

**Cerebras limits requests to an 8K context window, which may affect accuracy, speed, and costs

***SambaNova limits requests to an 8K context window and 1K outputs, which may affect accuracy, speed, and costs

—

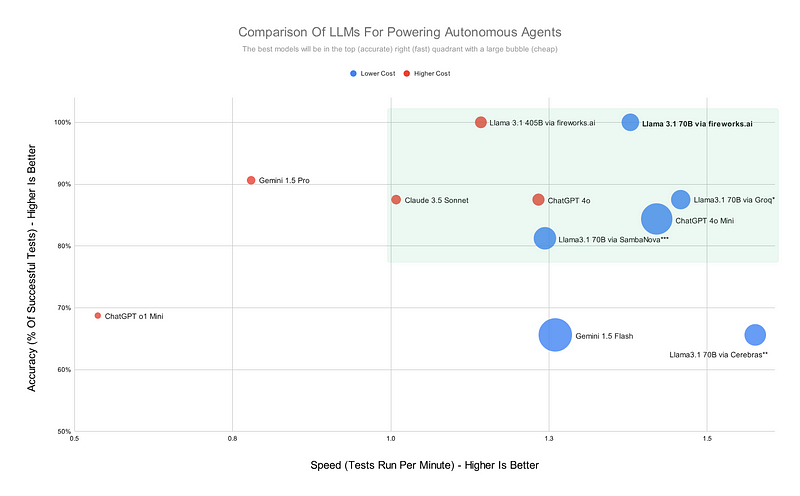

The graph above compares all of the models we tested along three primary dimensions:

Accuracy (y-axis)

Speed (x-axis)

Cost (bubble size)

The best models are in the top right quadrant, inside the green rectangle. The ideal model would be in the very top right corner with a large bubble (cost is presented as test suite runs per dollar, so a larger bubble means a cheaper model). In our analysis, Llama 3.1 70B from Fireworks AI was the closest to this ideal. Below, we’ll provide additional detail on our methodology and results, but before we do, it’s important to understand how LLMs power autonomous agents.

AI Copilots and LLMs:

A Quick Overview

AI copilots, like the one we’re creating at Locusive, rely heavily on Large Language Models (LLMs) for their core functionality. Unlike simple chatbots or retrieval augmented generation (RAG) systems, these copilots have to make repeated requests to an LLM behind the scenes each time a user makes a request, in order to:

Interpret user requests

Choose appropriate tools or data sources

Determine if enough information has been gathered

Formulate responses

Plan next steps if more data is needed
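The cycle above can be sketched as a simple loop. This is a minimal illustration and not Locusive’s implementation: the `Decision` type, the `run_agent` function, the tool registry, and the scripted stand-in for the LLM are all hypothetical names, chosen so the example runs on its own without calling any real model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Decision:
    """What the (hypothetical) LLM decided to do at one step."""
    action: str          # "use_tool" or "respond"
    tool: str = ""       # tool name when action == "use_tool"
    argument: str = ""   # tool input, or the final answer text


@dataclass
class AgentResult:
    answer: str
    llm_calls: int
    gathered: List[str] = field(default_factory=list)


def run_agent(
    request: str,
    call_llm: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> AgentResult:
    """Ask the LLM what to do, run the chosen tool, repeat until it responds."""
    gathered: List[str] = []
    for step in range(1, max_steps + 1):
        # One LLM call per step: interpret the request and plan the next move.
        decision = call_llm(request, gathered)
        if decision.action == "respond":
            # The LLM judged that enough information has been gathered.
            return AgentResult(decision.argument, step, gathered)
        # Otherwise run the tool the LLM selected and keep its output.
        gathered.append(tools[decision.tool](decision.argument))
    return AgentResult("Unable to answer within the step limit.", max_steps, gathered)


def scripted_llm(request: str, gathered: List[str]) -> Decision:
    """Scripted stand-in for a real LLM: query once, then answer."""
    if not gathered:
        return Decision("use_tool", tool="database", argument="SELECT COUNT(*) FROM orders")
    return Decision("respond", argument=f"Found: {gathered[-1]}")


tools = {"database": lambda query: "42 orders"}
result = run_agent("How many orders do we have?", scripted_llm, tools)
print(result.answer, "| LLM calls:", result.llm_calls)
```

Even this trivial request takes two LLM calls, which is why per-call speed, accuracy, and cost compound quickly in an agent.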

This continuous cycle demands an LLM that excels in rapid, accurate decision-making, not just language generation. Key factors for LLM selection in copilots include:

Speed: To minimize lag across multiple calls

Accuracy: To ensure proper decision-making at each step

Consistency: To maintain coherent reasoning throughout a session

Cost-efficiency: Given the high frequency of LLM usage

Understanding these requirements provides crucial context for our methodology and results, which we’ll explore next.

Our Methodology:

Executing Our Agent With Known Inputs And Outputs Using Different LLMs

Our goal in evaluating these LLMs was to identify the best LLM or combination of LLMs to use in Locusive’s copilot. Because of that, we needed to create a test suite that accurately reflected the real-world scenarios our copilot encounters. This meant designing tests that not only evaluated language understanding and generation, but also the LLM’s ability to make decisions, select appropriate tools, and maintain context over multiple interactions.

To achieve this, we developed an automated test suite that uses 16 different tests that mimic different types of requests that our copilot receives every day. These tests include many different scenarios including:

Querying internal databases

Searching through company knowledge bases

Interpreting and summarizing qualitative data

Answering product-specific questions

Searching the internet

Analyzing large amounts of data

The entire test suite is run in an automated and controlled manner — before each test, the relevant data sources are created, configured, and populated with data. During the execution of each test, the test framework makes a request to our agent, which then processes the request just like it would in the real world, returning the results to the test system. The test system then checks the results for accuracy (since it has knowledge of what a correct answer should look like), automatically recording which tests passed, which tests failed, the total tokens used for each test, and the total time taken for each test to run.

We ran the test suite twice for each model and averaged the results for both runs to derive our final results.
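A harness along these lines might look like the following sketch. The `SuiteCase` and `CaseOutcome` types, the `fake_agent` stub, and its token counts are hypothetical, invented only to show the flow of setup, execution, checking, recording, and averaging over two runs.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List, Tuple


@dataclass
class SuiteCase:
    name: str
    request: str
    setup: Callable[[], None]           # creates and populates data sources
    is_correct: Callable[[str], bool]   # knows what a correct answer looks like


@dataclass
class CaseOutcome:
    name: str
    passed: bool
    tokens: int
    seconds: float


def run_suite(cases: List[SuiteCase], agent: Callable[[str], Tuple[str, int]]) -> List[CaseOutcome]:
    """Run every case once, recording pass/fail, token usage, and wall time."""
    outcomes = []
    for case in cases:
        case.setup()
        start = time.perf_counter()
        answer, tokens = agent(case.request)
        elapsed = time.perf_counter() - start
        outcomes.append(CaseOutcome(case.name, case.is_correct(answer), tokens, elapsed))
    return outcomes


def accuracy(outcomes: List[CaseOutcome]) -> float:
    """Percentage of cases that passed."""
    return 100.0 * sum(o.passed for o in outcomes) / len(outcomes)


def fake_agent(request: str) -> Tuple[str, int]:
    """Stand-in agent returning (answer, tokens used); values are made up."""
    answers = {"How many users?": ("There are 3 users.", 1200)}
    return answers.get(request, ("I don't know.", 800))


cases = [
    SuiteCase("count_users", "How many users?", lambda: None, lambda a: "3" in a),
    SuiteCase("top_product", "Best-selling product?", lambda: None, lambda a: "Widget" in a),
]

# Run the suite twice and average, mirroring the procedure described above.
runs = [run_suite(cases, fake_agent) for _ in range(2)]
average_accuracy = mean(accuracy(run) for run in runs)
print(f"Average accuracy over {len(runs)} runs: {average_accuracy:.1f}%")
```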

Key Metrics: Accuracy, Speed, and Cost

We focused on three critical metrics:

Accuracy: Measured as the percentage of correctly completed tasks in a test suite.

Speed: Recorded as the total time it took for the test suite to run and presented as a derived metric: total number of tests that can be run per minute.

Cost: Calculated as the total expense to run the entire test suite once and presented as the total number of times a test suite can be run per dollar.

Test Environment and Setup

Each LLM was tested using the same hardware configuration to ensure fair comparison.

We ran the full test suite twice for each model to account for potential variations.

For models in beta (Groq, Cerebras, SambaNova), we either used the dashboard provided by the provider to estimate cost (Groq) or, because of their free beta status, estimated costs based on comparable services (Cerebras and SambaNova). Note that these providers all impose severe limitations on beta users that negatively affect our results. We expect these systems to be much more accurate and fast when they are out of beta.

All tests were conducted with realistic setup scenarios, including:

Pre-indexed documents in a vector database

Simulated customer database schemas

Typical API rate limits and token restrictions

By adhering to this methodology, we aimed to provide a comprehensive and fair comparison of LLM performance in the context of AI copilots. In the next section, we’ll dive into the results of our analysis.

Detailed Performance Analysis

Accuracy

In the world of AI copilots, accuracy isn’t just a nice-to-have — it’s essential. An inaccurate copilot can lead to misinformation, poor decision-making, and ultimately, a loss of user trust. In each of our automated tests, we have several different checks to ensure our copilot’s response is accurate and complete. This not only includes the content of the response itself, but also includes checking for completeness of lists, ensuring all relevant references and source files are provided, and ensuring our system responds in a reasonable amount of time. If any of these checks fail in a given test, the entire test fails. We mark a test as successful when all conditions of the test are met.
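The all-or-nothing rule described above can be sketched as follows. The `AgentResponse` type, the check names, and the 60-second limit are assumptions made for illustration; the actual checks and thresholds used in our tests are not shown here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class AgentResponse:
    text: str
    references: List[str]
    seconds: float


def evaluate(
    response: AgentResponse,
    expected_items: List[str],
    expected_refs: List[str],
    max_seconds: float,
) -> Tuple[bool, Dict[str, bool]]:
    """Return (passed, per-check results); the test passes only if every check does."""
    checks = {
        # Every expected list item must appear in the answer text.
        "content_complete": all(item in response.text for item in expected_items),
        # Every expected source must be cited.
        "references_present": set(expected_refs) <= set(response.references),
        # The answer must arrive within the time budget.
        "within_time_limit": response.seconds <= max_seconds,
    }
    return all(checks.values()), checks


response = AgentResponse(
    text="Our plans are Starter, Team, and Enterprise.",
    references=["pricing.pdf"],
    seconds=95.0,
)
passed, checks = evaluate(
    response, ["Starter", "Team", "Enterprise"], ["pricing.pdf"], max_seconds=60.0
)
print(passed, checks)
```

In this example the content and references are correct, but the response is too slow, so the whole test is marked as failed.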

The graph below provides a comparison of how our copilot performed when powered by each model we tested.

*Groq provided us with higher rate limits than what’s generally available to complete this test, but we were only able to complete one test suite (instead of two). The results shown here reflect that single test suite.

**Cerebras limits us to 8K tokens, even for Llama 3.1, which can cause tests to fail if they have a large amount of data in the context window

***Our SambaNova account was limited to 8K tokens and a 1K output window, which affected the accuracy of some of our tests which deal with lots of data

—

Top Performers

Llama 3.1 70B via fireworks.ai: 100%

Llama 3.1 405B via fireworks.ai: 100%

Gemini 1.5 Pro: 91%

ChatGPT 4o: 88%

Claude 3.5 Sonnet: 88%

Llama 3.1 70B via Groq: 88%

Llama 3.1 models from fireworks.ai stood out with perfect accuracy scores, demonstrating their robust performance across our diverse test suite. Gemini 1.5 Pro and the latest offerings from OpenAI and Anthropic also showed strong results, with accuracy above 85%.

Factors Affecting Accuracy

While analyzing the results, we identified several key factors that influenced accuracy:

Token Limits: Models with higher token limits generally performed better, as they could handle more context. For instance, the Llama 3.1 70B model via Cerebras, limited to 8K tokens, achieved only 66% accuracy compared to its 100% score via fireworks.ai.

Model Size: Larger models tended to perform well, as seen with the 405B parameter version of Llama 3.1 matching the 70B version’s perfect score.

Rate Limits: Severe rate limits from the fast inference providers may have affected accuracy.

Output Restrictions: SambaNova’s 1K output window limit affected accuracy in tests dealing with large amounts of data.

These results highlight the complex interplay between model capabilities and provider-specific limitations. While some models showed impressive raw performance, practical constraints like token limits and rate restrictions significantly impacted their real-world effectiveness.

In the next section, we’ll explore how these models fared in terms of speed — another crucial factor for creating responsive AI copilots.

Speed

In the world of AI copilots, a fast response is extremely important. Unfortunately, copilots that have to interact with an LLM multiple times in a single request are inherently slow, so it’s important to optimize for speed whenever possible. In our automated tests, we measure the response time for the entire test — from start to finish, since users will ultimately only see a single response from our copilot. One consequence of this is that even if an LLM produces fast output, if that output is wrong and our system needs to retry a task, it will increase the time it takes for our system to respond to the user. That’s why the fastest LLMs aren’t always the best ones to power an agent — an LLM needs to be both fast and accurate.
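A toy model makes the point about retries concrete. It assumes each agent step is retried until it succeeds and that attempts are independent, so the expected number of attempts per step is 1 divided by the success rate. All of the numbers below are hypothetical and are not measurements from our tests.

```python
def expected_latency_seconds(steps: int, seconds_per_call: float, success_rate: float) -> float:
    """Expected end-to-end time when each step is retried until it succeeds.

    Assumes independent attempts, so attempts per step follow a geometric
    distribution with mean 1 / success_rate.
    """
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    expected_attempts_per_step = 1.0 / success_rate
    return steps * seconds_per_call * expected_attempts_per_step


# Hypothetical models: one is faster per call, the other is right more often.
fast_but_sloppy = expected_latency_seconds(steps=5, seconds_per_call=2.0, success_rate=0.60)
slower_but_accurate = expected_latency_seconds(steps=5, seconds_per_call=3.0, success_rate=0.95)

print(f"fast but sloppy: {fast_but_sloppy:.1f}s")
print(f"slower but accurate: {slower_but_accurate:.1f}s")
```

Under these assumptions, the model with the slower individual calls still finishes the request sooner because it wastes fewer calls on retries.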

That said, the graph below measures the median test completion duration in seconds across our automated tests for each model.

*Groq provided us with higher rate limits than what’s generally available to complete this test, but we were only able to complete one test suite (instead of two). The results shown here reflect that single test suite.

**Cerebras limits us to 8K tokens, even for Llama 3.1, which may artificially decrease response times

***SambaNova limits us to 8K tokens and 1K output, which means we cannot handle as much data in each request, which may artificially decrease response times

—

Top Speed Performers

Gemini 1.5 Flash: 22.9s

Llama 3.1 70B via Cerebras: 38.1s

Llama 3.1 70B via Groq: 41.2s

ChatGPT 4o Mini: 42.3s

Llama 3.1 70B via fireworks.ai: 44s

Note that, while Gemini 1.5 Flash was the fastest model, it was also one of the least accurate.

Interestingly, larger models didn’t always mean slower responses. The 405B parameter version of Llama 3.1 was only marginally slower than its 70B counterpart (53s vs. 44s via fireworks.ai), and even the 70B model from Fireworks was among the fastest performers, likely because its high accuracy meant there were fewer retries or erroneous tool invocations.

These findings underscore the importance of considering the entire ecosystem when selecting an LLM for your copilot. While raw model performance is crucial, factors like rate limits and token restrictions can significantly impact real-world speed.

In the next section, we’ll explore the final piece of our analysis puzzle: cost. As we’ll see, achieving the right balance of accuracy and speed doesn’t have to break the bank.

Cost

While accuracy and speed are crucial, the cost of running an AI copilot can make or break its viability for businesses. AI copilots are new and consume a lot of tokens when communicating with their underlying LLMs, so new copilot developers must be conscious of their costs. On top of that, the lower we can get our costs, the better our pricing can be for our customers.

The graph below shows the average cost to run our test suite once when our copilot is powered by different models.

*Prices for Groq were given in the Groq dashboard but not actually charged. Additionally, only one test suite could be successfully completed with Groq, so the average price shown here reflects the price of the one test suite that we could run.

**We used the free beta version of Cerebras, so we estimated costs using Fireworks pricing and token counts produced from Cerebras testing

***We used the free beta version of SambaNova, so we estimated costs using Fireworks pricing and token counts produced from SambaNova testing

—

Most Cost-Effective Models

Gemini 1.5 Flash: $0.09 per test suite

ChatGPT 4o Mini: $0.11 per test suite

Llama 3.1 70B via SambaNova: $0.28 per test suite

Llama 3.1 70B via Cerebras: $0.29 per test suite

Llama 3.1 70B via Groq: $0.39 per test suite

Gemini 1.5 Flash and ChatGPT 4o Mini stand out as the most budget-friendly options. However, it’s crucial to remember that cost-effectiveness must be balanced with accuracy and speed for optimal copilot performance. For models in beta or free versions, we had to estimate costs based on comparable services: we applied Fireworks pricing to the token counts from their respective tests.

It’s worth noting that our overall winner, Llama 3.1 70B via Fireworks AI, comes in at $0.52 per test suite. While not the cheapest option, it offers an excellent balance of performance and cost.
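The estimation approach used for the free beta providers (another provider’s per-token pricing applied to the token counts measured in testing) can be sketched like this. The token counts and the $1.00 per million token rate are placeholders chosen for easy arithmetic; they are not Fireworks’ actual prices or our measured token usage.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
) -> float:
    """Estimate the cost of a run from token counts and per-million-token prices."""
    total = input_tokens * usd_per_million_input + output_tokens * usd_per_million_output
    return total / 1_000_000


# Placeholder values, NOT real pricing or measured usage.
estimated = estimate_cost_usd(
    input_tokens=300_000,
    output_tokens=20_000,
    usd_per_million_input=1.00,
    usd_per_million_output=1.00,
)
print(f"Estimated cost per test suite: ${estimated:.2f}")
```

An estimate like this inherits any distortion in the token counts, so a provider that truncates context (as with the 8K limits noted above) will tend to look cheaper than it would without that restriction.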

Factors Influencing Cost

Token Usage: Models that require fewer tokens to complete tasks tend to be more cost-effective.

Retry Rate: More accurate models may be more cost-effective in practice, as they require fewer retries to complete tasks successfully.

Provider Pricing Models: Different providers have varying pricing structures, which can significantly impact overall costs.

These findings highlight the importance of considering not just the raw cost per token, but also how a model’s accuracy and efficiency can affect the total cost of operation for an AI copilot.

In the next section, we’ll synthesize our findings across accuracy, speed, and cost to provide our overall recommendations for LLM selection in AI copilots.

Insights and Observations

Our analysis of various LLMs has yielded several key insights that can guide businesses in selecting the right model for their AI copilots. Here are some of the most interesting observations from our study.

Model Size: Bigger Isn’t Always Better

When comparing different sizes of the same model family, we found some intriguing results:

Llama 3.1 70B vs 405B: Both achieved 100% accuracy in our tests, but the 70B version was marginally faster (44s vs 53s) and significantly more cost-effective ($0.52 vs $1.82 per test suite).

This suggests that while larger models can offer impressive capabilities, they may not always be necessary for typical copilot tasks. The extra parameters of the 405B model didn’t translate to meaningful performance gains in our tests, making the 70B version a more efficient choice for most applications.
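The comparison can be made explicit with a little arithmetic, using only the figures quoted in this article (accuracy, median test duration, and cost per test suite for the two Fireworks-hosted models). The `ModelResult` type and the `dominates` helper are illustrative names, not part of our test framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelResult:
    name: str
    accuracy_pct: float        # higher is better
    median_seconds: float      # lower is better
    cost_per_suite_usd: float  # lower is better


def dominates(a: ModelResult, b: ModelResult) -> bool:
    """True if a is at least as good as b on every metric and better on at least one."""
    no_worse = (
        a.accuracy_pct >= b.accuracy_pct
        and a.median_seconds <= b.median_seconds
        and a.cost_per_suite_usd <= b.cost_per_suite_usd
    )
    strictly_better = (
        a.accuracy_pct > b.accuracy_pct
        or a.median_seconds < b.median_seconds
        or a.cost_per_suite_usd < b.cost_per_suite_usd
    )
    return no_worse and strictly_better


llama_70b = ModelResult("Llama 3.1 70B (Fireworks)", 100.0, 44.0, 0.52)
llama_405b = ModelResult("Llama 3.1 405B (Fireworks)", 100.0, 53.0, 1.82)

slowdown_pct = (llama_405b.median_seconds / llama_70b.median_seconds - 1) * 100
cost_ratio = llama_405b.cost_per_suite_usd / llama_70b.cost_per_suite_usd

print(f"405B is {slowdown_pct:.1f}% slower and {cost_ratio:.1f}x the cost")
print("70B dominates 405B:", dominates(llama_70b, llama_405b))
```

On these numbers the 405B model is roughly 20% slower and 3.5 times as expensive for the same accuracy, so the 70B model is at least as good on every dimension we measured.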

The Potential of Beta Providers

While Groq, Cerebras, and SambaNova showed limitations in our tests due to their rate and token limits, they also seem to be among the fastest and cheapest options available. They’re built to provide fast inference and they already support Llama 3. We believe that as these providers come out of beta, it’s likely their accuracy will reach state of the art, and their fast inference technology will power speedy interactions, which could lead them to become some of the most performant options.

Models Optimized For Reasoning

ChatGPT’s latest model, “o1 Mini”, was among the models we tested. Surprisingly, it had a lower accuracy score than most of the other models, not to mention its much slower speed. While it’s unclear exactly why this happened, given the emphasis that OpenAI placed on improving o1’s reasoning skills, our suspicion is that the model did not respond before our agent terminated its processing and that it didn’t follow output directions properly. It’s likely that OpenAI and other LLM providers will continue to optimize these models for both speed and instruction following, and that they will perform significantly better on future tests.

Data Copilots For Your Business

We’re big believers in the capability for AI copilots to improve user experiences, automate workflows, and analyze and retrieve data. At Locusive, we’re creating these copilots for your employees and customers. If you’d like to empower your users and customers to pull their own data and answer their own questions without having to invest in building your own copilots, you might benefit from using Locusive. Feel free to book a demo to see how we can help with your business today.